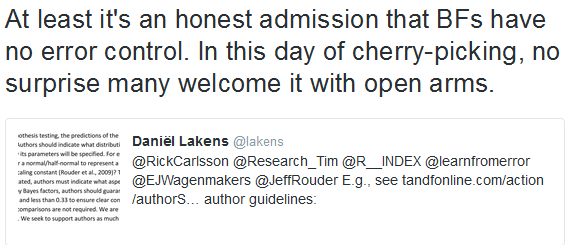

I noticed this exchange on Twitter last week. Daniel Lakens posted a screenshot from the reviewer guidelines of Comprehensive Results in Social Psychology. The guidelines promote Bayes factor analysis, and in particular accepting a hypothesis when the Bayes factor in favour of that hypothesis is greater than 3.

I’ve noticed variations on this argument (that Bayes factor analyses lack error control) floating around a fair bit over the last while. Being a Bayesian myself (mostly), and being concerned about making errors in inference, I figured it might be useful to sort through my thoughts on this issue and post some relevant points.

Point 1: Frequentist significance tests involve decision rules that control error rates; Bayesians report the probability of error

Significance tests - or at least most types of them - involve a decision rule set up in such a way that it will control the rate of some type of error. Almost always, the decision rule will be set up to keep the probability of a Type 1 error (a false rejection of the null hypothesis) to 5%. The decision rule is typically intended to be a rigid and intrinsic part of the analysis: We reject the null hypothesis if p < .05, and do not reject it if not.

On the other hand, in a Bayesian analysis, we don't necessarily have to apply some firm decision rule. A Bayesian analysis is capable of providing a statement like "Given the priors specified and data observed, there is an 85% probability that this hypothesis is correct". (A statement which implies, in turn, a 15% chance of being in error if one were to decide in favour of the hypothesis.) A researcher reporting a Bayesian conclusion like the one above could simply leave it at that; they could apply a decision rule (e.g., posterior probability > 90% is required to support a hypothesis), but they don't have to. Therefore, because a significance test is essentially a decision rule while Bayes' theorem isn't, it is probably best to say that a frequentist analysis seeks to control error rates, while a Bayesian analysis communicates the error probability (i.e., the probability that a particular conclusion is incorrect). Both of these approaches seem to be reasonable alternatives to me. But what are we assuming when we report error probabilities in this Bayesian way?

Point 2: Frequentist and Bayesian approaches are both concerned with the probability of error, but they condition on different things

Frequentist hypothesis tests are typically aimed at controlling the rate of error, conditional on a particular hypothesis being true. As mentioned above, a statistical significance test for a parameter is almost always set up such that, conditional on the null hypothesis being true, we will erroneously reject it only 5% of the time.

The situation in a Bayesian analysis is a bit different. A Bayesian test of a hypothesis produces a posterior probability for that hypothesis. We can subtract this posterior probability from 1 to get an error probability. But this error probability isn't the probability of error, conditional on a particular hypothesis being true; it's the probability that a particular conclusion is incorrect, conditional on the priors specified and data observed.

So we have two types of error probabilities: The probability of error conditional on a hypothesis, and the probability of error conditional on the prior and observed data. Both of these are perfectly reasonable things to care about. I don’t think it’s reasonable for anyone to dictate that researchers should care about only one of these.

For me personally, once I’ve finished collecting data, run an analysis, and reached a conclusion, I’m most interested in the probability that my conclusion is wrong (which is part of the reason why I’m keen on Bayesian analysis). But this doesn’t mean I’m completely uninterested in error rates conditional on particular hypotheses being true: This is something I might take into account when planning a study (e.g., in terms of sample size planning). I’m also broadly interested in how different types of Bayesian analyses perform in terms of error rates conditional on hypotheses. Which leads me to the next point...

Point 3: An analysis may focus on one type of error probability, but we can still study how it performs in terms of the other

A frequentist significance test will typically be set up specifically to control the rate of falsely rejecting the null, conditional on the null hypothesis being true, at 5% (or some other alpha level). But this doesn’t mean we can’t work out the probability that a specific conclusion is incorrect, conditional on the data observed (i.e., the Bayesian type of error probability). Ioannidis’ paper claiming that most published research findings are false deals with exactly this problem (albeit based on some shaky assumptions): He calculated the probability that an effect actually exists, conditional on a significant test statistic, for a range of priors and power scenarios. There is admittedly a bit of a conceptual problem here with trying to draw Bayesian conclusions from a frequentist test, but the mathematics are fairly straightforward.
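To make that calculation concrete, here is a minimal sketch of the simplest version of it: Bayes' theorem applied to a significant result, given a prior probability that the effect exists, the power of the test, and the alpha level. The function name and the scenario values are my own illustrative choices, and this version leaves out refinements such as the bias term that Ioannidis includes.

```python
def prob_effect_given_significant(prior_h1: float, power: float,
                                  alpha: float = 0.05) -> float:
    """P(effect exists | significant result), via Bayes' theorem.

    prior_h1: assumed prior probability that the effect exists
    power:    P(significant | effect exists)
    alpha:    P(significant | no effect)
    """
    true_positives = power * prior_h1
    false_positives = alpha * (1.0 - prior_h1)
    return true_positives / (true_positives + false_positives)


# Illustrative (hypothetical) prior and power scenarios.
for prior_h1 in (0.1, 0.5):
    for power in (0.2, 0.8):
        p = prob_effect_given_significant(prior_h1, power)
        print(f"P(H1)={prior_h1:.1f}, power={power:.1f}: "
              f"P(H1 | p < .05) = {p:.3f}, error probability = {1 - p:.3f}")
```

The error probability printed here is the Bayesian type (conditional on the significant result and the assumed prior), even though the test itself was set up to control the frequentist type.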

Similarly, while a Bayesian analysis is set up to tell us the probability that a particular conclusion is in error, given the priors set and data observed, there’s no reason why we can’t work out the rate at which a Bayesian analysis with a particular decision rule will result in errors, conditional on a particular hypothesis being true. This previous blog post of mine is concerned with this kind of problem, as are these better ones by Daniel Lakens, Alex Etz and Tim van der Zee.
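As a rough illustration of this kind of exercise, the sketch below simulates how often a "support H1 if BF10 > 3" rule would wrongly support H1 when the null is true. To keep it self-contained I've assumed a deliberately simplified model rather than the default t-test Bayes factor: unit-variance normal data, H0: effect = 0, and H1: effect ~ Normal(0, tau²), for which BF10 has a closed form. The function names, tau, sample size and number of simulations are all illustrative assumptions.

```python
import math
import random


def bf10_normal(xbar: float, n: int, tau: float) -> float:
    """BF10 for H0: delta = 0 vs H1: delta ~ Normal(0, tau^2).

    Assumes n observations with known unit variance, so the sample mean
    is Normal(0, 1/n) under H0 and Normal(0, tau^2 + 1/n) under H1.
    """
    var0 = 1.0 / n
    var1 = tau ** 2 + 1.0 / n
    log_bf = (0.5 * math.log(var0 / var1)
              + 0.5 * xbar ** 2 * (1.0 / var0 - 1.0 / var1))
    return math.exp(log_bf)


def false_support_rate(n: int, tau: float = 1.0, threshold: float = 3.0,
                       n_sims: int = 20000, seed: int = 1) -> float:
    """Proportion of simulated studies with BF10 > threshold when H0 is true."""
    rng = random.Random(seed)
    hits = 0
    for _ in range(n_sims):
        xbar = rng.gauss(0.0, 1.0 / math.sqrt(n))  # sample mean under H0
        if bf10_normal(xbar, n, tau) > threshold:
            hits += 1
    return hits / n_sims


if __name__ == "__main__":
    for n in (20, 50, 200):
        print(f"n={n}: rate of BF10 > 3 under H0 = {false_support_rate(n):.4f}")
```

The same loop could be run with data generated under a specific non-zero effect to estimate how often the rule fails to support H1 when it is true.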

The upshot is that while a particular type of analysis (frequentist or Bayesian) may be tailored toward focusing on a particular type of error probability, we can still use a bit of mathematics or simulation to work out how it performs in terms of the other type. So choosing to focus on controlling one type of error probability hardly implies that a researcher is disregarding the other type of error probability entirely.

Point 4: The Bayesian error probability depends on the prior

A word of caution about Bayesian error probabilities: Given its dependence on a prior, the type of inference and error probability I get with a Bayesian analysis is useful only to the extent that I can set a prior that’s relevant to my readership. If I set a prior where most of the readers of my study can say “yes, that seems a reasonable description of what we knew in advance”, and I draw a conclusion, then the posterior probability distribution tells them something useful about the probability of my conclusion being in error. But if they think my prior is utterly unreasonable, then I haven’t given the reader direct and useful information about the chance that my conclusion is wrong.

Point 5: Back to that editorial recommendation to support H1 if BF10 > 3

Finally, I want to reflect on how error probabilities are dealt with in a very specific type of analysis, which is a Bayes factor analysis using a default prior for the H1 model, and a decision rule based solely on the Bayes factor rather than a posterior probability distribution. This is more or less the type of analysis recommended by the “Comprehensive Results in Social Psychology” editorial policy that started off this whole discussion.

Now in this case, the analysis is not set up to control the rate of error, conditional on a particular hypothesis being true, to a specific value (as in a frequentist analysis). However, because it doesn’t involve a commitment to a particular prior probability that the null hypothesis is correct, it also doesn’t directly tell us the probability that a particular conclusion is false, conditional on the data (the Bayesian type of error probability).

This latter problem is reasonably easily remedied: The author can specify prior probabilities for the null and alternate, and easily calculate the posterior probability that each hypothesis is correct (provided these prior probabilities sum to 1):

Posterior odds = P(H1)/P(H0) × BF10

P(H1|Data) = Posterior odds / (1 + Posterior odds)

For example, say we're doing a t-test and take an uber-default assumption of P(H0) = P(H1) = 0.5, with a Cauchy (0, 0.707) on standardised effect size under H1 (the JASP default, last time I checked). We then observe a BF10 of 3. This implies a posterior probability of 3/4 = 75% in favour of H1. In other words, if you observed such a Bayes factor and concluded in favour of the alternate, there'd be a 25% chance you'd be in error (conditional on the data and prior specification).
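The conversion from a Bayes factor to a posterior probability is short enough to sketch in a few lines. The function name and the alternative prior probabilities below are illustrative assumptions; the calculation is just the posterior-odds formula given above, and it reproduces the 75% figure when P(H0) = P(H1) = 0.5 and BF10 = 3.

```python
def posterior_prob_h1(bf10: float, prior_h1: float = 0.5) -> float:
    """Posterior probability of H1 given BF10 and a prior P(H1).

    Assumes H0 and H1 are the only hypotheses, so P(H0) = 1 - P(H1).
    """
    prior_odds = prior_h1 / (1.0 - prior_h1)
    posterior_odds = prior_odds * bf10
    return posterior_odds / (1.0 + posterior_odds)


# The same BF10 = 3 under a few different (hypothetical) priors on H1.
for prior_h1 in (0.2, 0.5, 0.8):
    p = posterior_prob_h1(3.0, prior_h1)
    print(f"P(H1)={prior_h1:.1f}: P(H1 | data) = {p:.3f}, "
          f"error probability if we support H1 = {1 - p:.3f}")
```

Running this over a range of priors is one cheap way to show readers how much the error probability attached to "BF10 > 3" depends on what they believed beforehand.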

That said, this error probability is only informative to the extent that the priors are reasonable. The prior used here takes a spike-and-slab form, which implies that there's a 50% chance the effect size is exactly zero, yet a substantial chance that it's extremely large (e.g., conditional on H1, a roughly 15% prior probability that the absolute true effect size is greater than 3). A reader may well see this prior probability distribution as an unreasonable description of what is known about a given parameter in a given research setting.
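The tail probability quoted above can be checked directly from the Cauchy distribution function. This is a minimal sketch with an illustrative function name; it gives the probability under the H1 "slab" alone, and multiplying by P(H1) = 0.5 gives the corresponding probability under the full spike-and-slab prior.

```python
import math


def cauchy_tail_prob(threshold: float, scale: float = 0.707) -> float:
    """P(|delta| > threshold) for delta ~ Cauchy(0, scale)."""
    return 1.0 - (2.0 / math.pi) * math.atan(threshold / scale)


under_h1 = cauchy_tail_prob(3.0)   # prior probability under the H1 slab
overall = 0.5 * under_h1           # weighted by an assumed P(H1) = 0.5
print(f"P(|delta| > 3 | H1) = {under_h1:.3f}")
print(f"P(|delta| > 3) under the spike-and-slab prior = {overall:.3f}")
```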

So I think it is crucial that authors using Bayes factor analyses actually report posterior probabilities for some reasonable and carefully selected priors on H1 and H0 (or even a range of priors). If this isn’t done, the reader isn’t provided with direct information about either type of error probability. Of course, a savvy reader could assume some priors and work both types of error probability out. But I think we have an obligation to not leave that entire process to the reader. We should at least give clear information about one of the types of error rates applicable to our study, if not both. So I disagree with the reviewer guidelines that provoked this post: We shouldn't make statistical inferences from Bayes factors alone. (If you use Bayes factors, make the inference based on the posterior probability you calculate using the Bayes factor.)

Secondly, the priors we set on H0 and H1 (and the prior on effect size under H1) in a Bayes factor analysis should be selected carefully such that they're a plausible description of what was known ahead of the study. Otherwise, we'll get a posterior probability distribution and some information about the probability of error, but this probability of error will be conditional on assumptions that are relevant neither to the research question nor to the reader.
