This article has a bit of an unusual origin story. Last year, I was at the Modern Modeling Methods conference in Connecticut, and gave a talk discussing Bayes factor tests of null hypotheses, raising the problem of how they implicitly put a huge spike of prior probability on a point null (which, at the time, seemed somewhat silly to me).

Kyle Lang was in the audience for the talk, and mentioned afterwards that he was putting together a special issue of Research in Human Development about methodological issues in developmental research. He had already put together quite a detailed proposal for this special issue, including an abstract for an article on Bayes factors. He suggested that I write this article, along with whichever collaborators seemed suitable. I reached out to Michael (a fantastic colleague of mine at Massey) and Rasmus (whose work I knew through things like his brilliant Bayesian Estimation Supersedes the t-test (BEST) online app).

So away we went, trying to write a gentle and balanced introduction to Bayes factors targeted at developmental researchers. (We use Wynn's classic study purportedly showing arithmetic abilities in babies as a running example.) I would have to say our article is less a brand-new original contribution to knowledge than the type of introduction to a methodological issue one might often find in a textbook. But as an ECA and member of the precariat, I am definitely not in the habit of turning down invites to publish journal articles!

Now the small group of people who follow me on Twitter, hang out on PsychMAD, and are most likely to be reading this post are hardly likely to need a gentle introduction to Bayes factors; most of y'all run Markov chains before breakfast. And there do already exist some really great general introductions to Bayesian estimation and Bayes factors; Rouder et al. (2009), Kruschke and Liddell (2017), and Etz and Vandekerckhove (2017) are some of my favourites. Tailoring an introduction to a particular sub-discipline is obviously just a small contribution to the literature on Bayesian inference.

That said, there are a few things about our introduction to Bayes factors that are a bit unusual for the genre, and that might persuade some of you that this is a useful introduction to share:

1. No pitchforks raised at frequentists

As one must in these situations, we begin by pointing out some problems with null hypothesis significance testing that might prompt someone to want to try a Bayesian approach (e.g., a p value is a statement about uncertainty that is difficult to intuitively understand or communicate to the public; NHST doesn't deal well with optional stopping; there is an asymmetry within NHST wherein only significant results are regarded as informative; etc.). But we aren't rabid Bayesians about this; some of my favourite Twitter-friends are frequentists, after all. We acknowledge that the identified problems with hybrid NHST don't apply to every form of statistical significance testing (cf. equivalence testing), and that we are just presenting a Bayesian response to some of these problems (the implicit message being that this is only one way of going about an analysis).

2. Making it clear what Bayes factors are good for, and what they aren't.

The role and importance of Bayes factors within the broader Bayesian church can be a little confusing. By one view, the Bayes factor is just the link between the prior odds and the posterior odds for any two models, so almost any Bayesian analysis uses a Bayes factor in some sense. By another view, running a data analysis focusing on a Bayes factor is a quaint activity undertaken only by that funny sort of Bayesian who thinks point 'nil' hypotheses are plausible.

In our article, we draw on Etz and Wagenmakers' excellent historical account of the Bayes factor, mentioning how the factor came about in part as Harold Jeffreys' solution to a particular problem with Bayesian estimation: For some choices of prior, even if a parameter takes some specific hypothesised value (a "general law"), and we collect data and estimate the value of the parameter, we may never be able to collect enough data to produce a posterior probability distribution that suggests that the parameter actually takes this hypothesised value. Jeffreys discussed the problem in relation to non-informative discrete prior distributions, but the problem is especially acute for continuous priors, where the prior probability that the parameter takes any specific point value is zero. In effect, if you want to estimate a parameter where some prior information or theory suggests that the parameter should take some specific point value - such as zero - conventional Bayesian estimation is sort of a pain: You can't just place a continuous prior on the parameter.

The Bayes factor approach solves this problem by allowing us to focus our attention on a comparison of two models: One in which a parameter is forced to take some fixed value (almost always of zero), and one in which it is not (having instead some continuous prior). Comparing a point null hypothesis to a less restricted alternate hypothesis is not the only type of match-up one can use Bayes factors to analyse, but it is the type of match-up that a Bayes factor analysis is particularly useful for examining.
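To make this kind of match-up concrete, here is a minimal sketch that is not taken from the article. It assumes a toy binomial setting (a point null of theta = 0.5 against a Beta prior on theta under the alternative), hypothetical data of 14 successes in 20 trials, and function names invented for illustration. It computes the Bayes factor in closed form and then uses it as the link between prior and posterior odds.

```python
from math import lgamma, log, exp


def log_beta(a, b):
    """Log of the Beta function B(a, b)."""
    return lgamma(a) + lgamma(b) - lgamma(a + b)


def bf01_binomial(k, n, theta0=0.5, a=1.0, b=1.0):
    """Bayes factor for H0: theta = theta0 against H1: theta ~ Beta(a, b).

    The binomial coefficient appears in both marginal likelihoods and cancels.
    """
    log_m0 = k * log(theta0) + (n - k) * log(1 - theta0)
    log_m1 = log_beta(a + k, b + n - k) - log_beta(a, b)
    return exp(log_m0 - log_m1)


def posterior_prob_h0(bf01, prior_prob_h0):
    """Posterior odds = Bayes factor x prior odds, converted to a probability."""
    prior_odds = prior_prob_h0 / (1 - prior_prob_h0)
    posterior_odds = bf01 * prior_odds
    return posterior_odds / (1 + posterior_odds)


# Hypothetical data: 14 successes in 20 trials.
bf01 = bf01_binomial(k=14, n=20)
print(f"BF01 = {bf01:.3f}")
for prior in (0.2, 0.5, 0.8):
    post = posterior_prob_h0(bf01, prior)
    print(f"P(H0) = {prior:.1f} -> P(H0 | data) = {post:.3f}")
```

The same Bayes factor leads to quite different posterior probabilities depending on how plausible the point null was to begin with.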

In real research, sometimes this type of comparison will be relevant - i.e., sometimes there will be good reason to treat both a point null hypothesis and a less restrictive alternate as plausible - and sometimes it won't. There do exist experimental manipulations that probably have absolutely no effect on the dependent variable of interest (e.g., in Bem's ESP studies). But there also exist many contexts where exactly-zero relationships are simply implausible. For example, this is true of almost any correlational research in psychology - a correlation between two variables A and B can arise from an effect of A on B, B on A, or via a shared effect of any of a huge array of potential third variables, so it defies belief that any such correlation would be exactly zero in size.

Now obviously, Bayes factor analyses can be used to test hypotheses other than point nulls - e.g., a null interval, or in other words a hypothesis that a parameter is trivially small (rather than exactly zero) in size. But if you're testing a null interval rather than a point null, then it's easy enough to just use Bayesian estimation with a continuous prior for the parameter, and the special utility of an analysis with Bayes factors is obviated. If you aren't actually wanting to test a point null hypothesis, then as Jake Chambers would say:

3. Speaking of which, ROPEs...

As well as Bayes factors, we discuss the approach of Bayesian estimation with a defined Region of Practical Equivalence, as advocated by John Kruschke (e.g., here). This is a really useful approach when there is no reason to believe that a parameter is exactly zero in size, but we want to work out if it's reasonably large (or negligibly small).
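For a rough sense of what a ROPE summary involves, here is a small sketch. It assumes you already have posterior draws for an effect size; the draws below are simulated from a normal distribution purely as a stand-in for real MCMC output, and the ROPE limits of plus or minus 0.1 are an arbitrary illustrative choice rather than a recommendation.

```python
import random


def rope_probability(draws, rope=(-0.1, 0.1)):
    """Share of posterior draws that fall inside the ROPE (inclusive)."""
    low, high = rope
    inside = sum(1 for d in draws if low <= d <= high)
    return inside / len(draws)


# Stand-in for MCMC output: hypothetical posterior draws of an effect size.
rng = random.Random(1)
draws = [rng.gauss(0.05, 0.08) for _ in range(20_000)]

p_in_rope = rope_probability(draws)
p_above = sum(d > 0.1 for d in draws) / len(draws)
print(f"P(effect within ROPE | data) ~ {p_in_rope:.3f}")
print(f"P(effect above ROPE | data)  ~ {p_above:.3f}")
```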

4. If you do use a Bayes factor, it's a step on the path, not the endpoint.

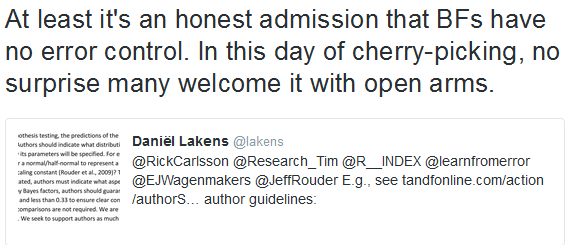

We suggest that a researcher using a Bayes factor analysis needs to select a prior odds for the compared hypotheses, and report the posterior odds (and ideally posterior probabilities), rather than just treating the Bayes factor as the endpoint of an analysis. A Bayes factor alone is not an adequate summary of uncertainty and the risk of error: It provides neither the known long-term error probability that we might get in a frequentist analysis, nor the posterior probability of "being wrong" that we can achieve with a full-blown Bayesian analysis (see this earlier post for more on this). Likewise, we urge against interpreting Bayes factors directly using qualitative cut-offs such as BF above 3 = substantial evidence.

5. You can test point nulls using Bayesian estimation

Although it'll be a point that's somewhat obvious to a lot of you, we note that it's perfectly possible to test a point hypothesis (and calculate a non-zero posterior probability for that point hypothesis) when using Bayesian estimation. You can do this simply by including a 'toggling' parameter that determines whether or not the main parameter of interest is fixed at a specific value, with a Bernoulli prior on this toggling parameter. Doing so admittedly requires a little more programming work, but it's hardly impossible.

By emphasising this connection between estimation and Bayes factors, hopefully the rather weird nature of Bayes factors becomes clear: They're a Bayesian method that implicitly places a large chunk of prior probability on a specific point value, usually of an exactly zero effect. The implicit prior probability distribution for an analysis with a Bayes factor thus looks more like the Dark Tower sitting atop a gentle hill than a non-informative prior distribution. If you don't see a parameter value of exactly zero as being remarkably and especially plausible, maybe a Bayes factor analysis isn't the right choice for you.
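The toggling-parameter idea can be sketched in a few lines. This is an illustration only, not the code behind the article or the app: it assumes a toy binomial model (a spike at theta = 0.5, a Uniform(0, 1) slab otherwise), hypothetical data of 14 successes in 20 trials, and it uses simple likelihood weighting of prior draws rather than a proper MCMC sampler. The closed-form answer from the Bayes factor route is included to show that the two approaches agree.

```python
import random
from math import lgamma, exp


def posterior_prob_null_mc(k, n, prior_prob_null=0.5, theta0=0.5,
                           n_draws=200_000, seed=1):
    """Estimate P(theta = theta0 | data) by weighting joint prior draws."""
    rng = random.Random(seed)
    weight_null = 0.0
    weight_total = 0.0
    for _ in range(n_draws):
        # The toggling parameter: Bernoulli(prior_prob_null).
        is_null = rng.random() < prior_prob_null
        # Spike at theta0 if toggled on, Uniform(0, 1) slab otherwise.
        theta = theta0 if is_null else rng.random()
        # Binomial likelihood kernel (the constant coefficient cancels).
        weight = theta ** k * (1 - theta) ** (n - k)
        weight_total += weight
        if is_null:
            weight_null += weight
    return weight_null / weight_total


def posterior_prob_null_exact(k, n, prior_prob_null=0.5, theta0=0.5):
    """Same quantity via the closed-form marginal likelihoods."""
    m0 = theta0 ** k * (1 - theta0) ** (n - k)
    m1 = exp(lgamma(k + 1) + lgamma(n - k + 1) - lgamma(n + 2))  # B(k+1, n-k+1)
    numerator = prior_prob_null * m0
    return numerator / (numerator + (1 - prior_prob_null) * m1)


mc = posterior_prob_null_mc(k=14, n=20)
exact = posterior_prob_null_exact(k=14, n=20)
print(f"Monte Carlo: {mc:.3f}   closed form: {exact:.3f}")
```

Written this way, the lump of prior probability sitting on the point value is an explicit input (`prior_prob_null`) rather than something hidden inside the analysis.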

6. Got an app with that?

Why yes, we do! Rasmus coded up a nifty web app for us (screenshot below), which allows you to compare two means (as in a t test) while calculating the posterior odds that the mean difference is exactly zero in size (as in a Bayes factor analysis), and also the probability that the difference in means falls within some region of practical equivalence (as in a ROPE analysis). You get to define the prior probability of an exactly zero difference, the scale parameter on the Cauchy prior on effect size under H1, and the width of the ROPE.

The article isn't perfect; one thing that I realised too late in the game to change was that we strike rather too optimistic a tone about how Bayes factors perform with optional stopping. Bayes factors do retain their original interpretation with optional stopping, which jibes well with our focus on converting Bayes factors to posterior odds and thus interpreting them, rather than using qualitative cut-offs to interpret Bayes factors directly. But when they are used in combination with simple decision rules (e.g., if the BF is greater than 10, I'll support H1), and optional stopping is utilised, inflated error rates can result. We should have included a warning about this. Instead, at this late point, I'll direct you to this blog post by Stephen Martin for more.
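If you want to poke at the optional stopping issue yourself, a small simulation along these lines might help. It is a sketch under stated assumptions, not an analysis from the article: coin-flip data generated with the null (theta = 0.5) true, a Uniform(0, 1) prior under H1, a check of the Bayes factor after every observation from `n_min` onwards, and the "BF above 10 means I'll support H1" rule from the paragraph above. All names and settings are hypothetical.

```python
import random
from math import lgamma, log


def log_bf10(k, n):
    """log BF10 for H1: theta ~ Uniform(0, 1) against H0: theta = 0.5."""
    log_m1 = lgamma(k + 1) + lgamma(n - k + 1) - lgamma(n + 2)
    log_m0 = n * log(0.5)
    return log_m1 - log_m0


def false_support_rates(threshold=10.0, n_min=10, n_max=200,
                        n_sims=2000, seed=1):
    """Share of null-true data sets in which the rule ends up supporting H1.

    Returns (rate with a look after every observation, rate with one look at n_max).
    """
    rng = random.Random(seed)
    log_threshold = log(threshold)
    hits_sequential = 0
    hits_fixed = 0
    for _ in range(n_sims):
        k = 0
        crossed = False
        for n in range(1, n_max + 1):
            k += rng.random() < 0.5  # one more coin flip under H0
            # Crossing at any look is what a stop-at-first-crossing rule reports.
            if n >= n_min and log_bf10(k, n) > log_threshold:
                crossed = True
        hits_sequential += crossed
        hits_fixed += log_bf10(k, n_max) > log_threshold
    return hits_sequential / n_sims, hits_fixed / n_sims


sequential_rate, fixed_rate = false_support_rates()
print(f"Support for H1 when H0 is true, optional stopping: {sequential_rate:.3f}")
print(f"Support for H1 when H0 is true, fixed n:           {fixed_rate:.3f}")
```

Because both rates are computed from the same simulated sequences, the optional stopping rate can never come out lower than the fixed-n rate; how much higher it is will depend on the threshold, the prior, and how often you look.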

I hope that some of you find the article useful, or at least not too egregious an affront to your own proclivities (be they Bayesian, Bayes-factorian, or frequentist). Comments welcome!